Performant Isolation for Secure AI Agents

AI agents are revolutionizing how we handle complex, multi-stage processes, from triaging emails to responding to customer inquiries. Their power lies in their autonomy and ability to perform a series of interconnected tasks. But with this power comes a critical question: what happens when an agent misbehaves?

To address this new security risk, we explored the feasibility of running complex agentic systems within Edera’s isolated zones. The full details of this exploration are in the Performant Isolation for Agentic AI report.

Running AI agents directly on a shared machine or within shared-kernel container environments, such as Docker, poses substantial security risks. AI agents can inadvertently or maliciously run harmful commands, whether they are executing self-generated code, responding to external instructions, or being influenced by indirect prompt injections. If such an agent gains access to the host file system, it could exfiltrate sensitive data like API keys or credentials. Likewise, if it reads system or application logs, it may unintentionally or deliberately expose confidential information, potentially leading to remote code execution or data compromise.

We face a balancing act: we need to give agents enough autonomy to complete complex tasks, but we must prevent them from becoming the weakest link in our systems.

To address the risks of agents gaining full access to an execution environment, we propose using Edera to achieve strong security guarantees while placing minimal limits on the agent’s capabilities.

Edera provides strong isolation for workloads, allowing each single-purpose agent to run in a fully isolated Edera zone.

How Edera Secures Agentic AI with Strong Isolation

Isolated Execution Environments for Each AI Agent

Each agent operates in its own isolated Edera zone without access to the host operating system or file system.

Controlled Input and Output

Inputs and outputs are tightly isolated between agents, ensuring one agent cannot influence or alter another agent’s behavior. This containment prevents prompt injections or manipulated outputs from propagating across agents.
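To make the data flow concrete, here is a minimal sketch of a host-side handoff between two stages. It is plain Python and provides no isolation on its own; it only models the idea that each agent sees nothing but the payload the host chooses to forward. The names `handoff` and `run_pipeline`, the JSON payload shape, and the allow-list of fields are all assumptions made for illustration and are not taken from the report or from Edera's tooling.

```python
import json
from typing import Callable

# Hypothetical agent type: receives one JSON string, returns one JSON string.
Agent = Callable[[str], str]


def handoff(raw_output: str, allowed_keys: set[str]) -> str:
    """Host-side check between stages: keep only the expected string fields."""
    data = json.loads(raw_output)
    if not isinstance(data, dict):
        raise ValueError("agent output must be a JSON object")
    # Drop anything the next stage was not explicitly meant to receive.
    cleaned = {
        key: value
        for key, value in data.items()
        if key in allowed_keys and isinstance(value, str)
    }
    missing = allowed_keys - cleaned.keys()
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    return json.dumps(cleaned, sort_keys=True)


def run_pipeline(stages: list[tuple[Agent, set[str]]], first_input: str) -> str:
    """Run agents in order; each one receives only the filtered previous output."""
    payload = first_input
    for agent, allowed_keys in stages:
        payload = handoff(agent(payload), allowed_keys)
    return payload
```

In a real deployment each `agent` call would be a workload running in its own zone, and the host would be the only component that moves data from one stage to the next.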

Minimal, Explicit Data Access for Agent Tasks

Each agent only has access to the data that is directly passed into its zone. If an agent needs to operate on files, the files are passed into that agent’s zone. Any changes are not persisted until the files are passed back to the host machine as output.
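The pass-in, pass-out pattern described above can be sketched as follows. This is an illustration of the data flow only: a temporary directory stands in for the zone and offers none of its isolation. The function name `run_with_staged_files` and the `agent` callable are hypothetical and are not part of Edera's API.

```python
import shutil
import tempfile
from pathlib import Path
from typing import Callable, Iterable


def run_with_staged_files(
    agent: Callable[[Path], None],
    inputs: Iterable[Path],
    output_dir: Path,
) -> list[Path]:
    """Copy inputs into a scratch directory, run the agent there, copy results out."""
    with tempfile.TemporaryDirectory() as scratch:
        workdir = Path(scratch)
        for src in inputs:
            # The agent works on copies, never on the original files.
            shutil.copy2(src, workdir / src.name)

        agent(workdir)  # hypothetical agent entry point

        # Nothing persists unless it is explicitly passed back as output.
        output_dir.mkdir(parents=True, exist_ok=True)
        results = []
        for produced in sorted(workdir.iterdir()):
            if produced.is_file():
                results.append(Path(shutil.copy2(produced, output_dir / produced.name)))
        return results
```

Because the scratch directory is deleted when the function returns, any file the agent wrote but the host did not copy out is discarded, which mirrors the "not persisted until passed back" behavior.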

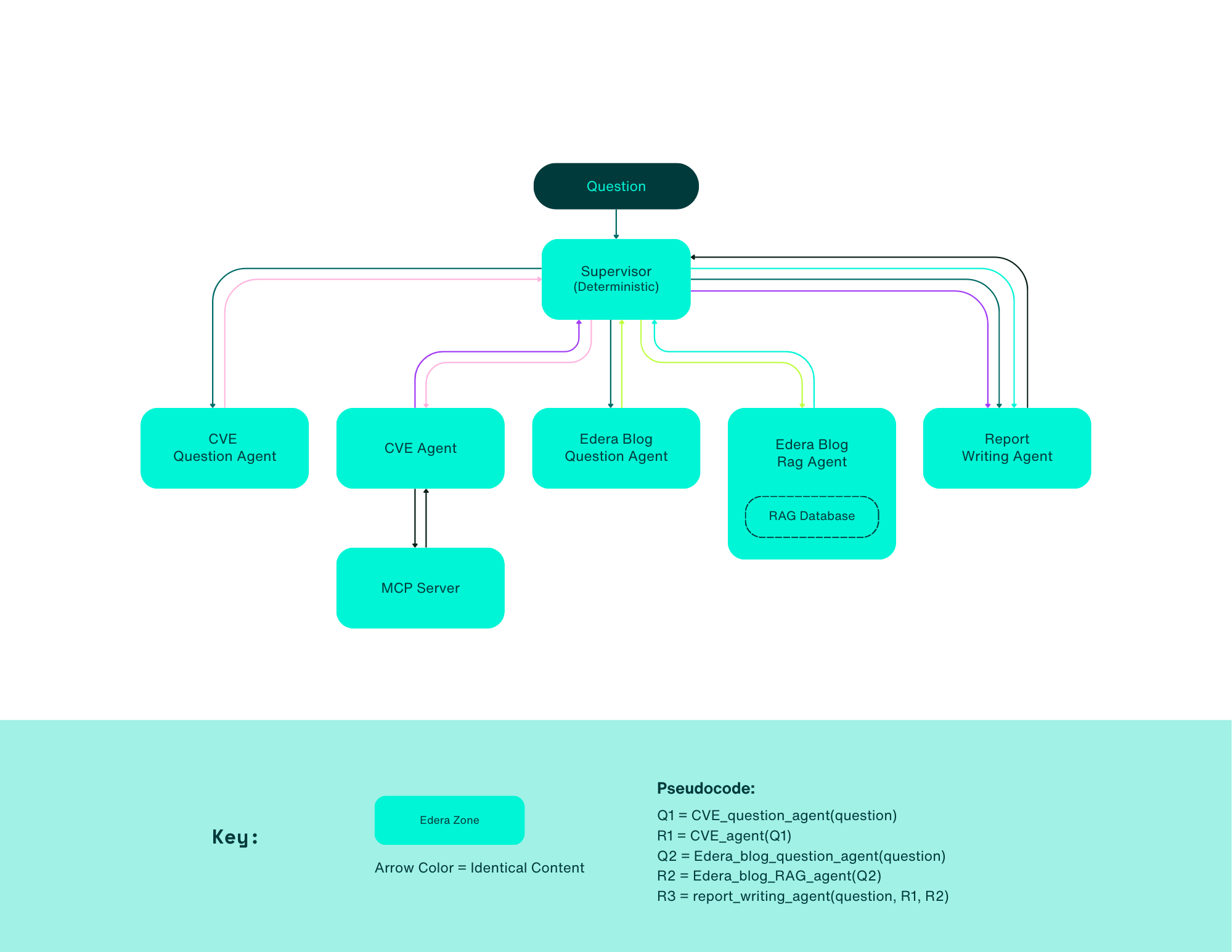

In the report, we explore how this architecture in Edera compares to using traditional Docker containers in terms of performance and complexity. We constructed a sample architecture that uses secure agent design patterns, Model Context Protocol (MCP), and Retrieval Augmented Generation (RAG) and implemented it in both Edera and Docker.

The results were compelling: not only was it feasible to securely orchestrate multi-stage agents in Edera, but our implementation demonstrated that running agents in Edera can actually be faster than running them in Docker.

This work validates that security for AI agents doesn’t have to come with a performance penalty. By strategically combining secure agent design patterns with the strong isolation provided by Edera, we can build robust, fast, and secure AI applications.

Want to learn more? Read the full report here. You can also watch Dan Fernandez’s presentation: The Paranoid's Guide to Deploying Skynet's Interns.

FAQs

What security risks do AI agents introduce?

AI agents can execute self-generated code, process untrusted inputs, and access sensitive data, making isolation critical to prevent data leakage and system compromise.

Why are containers insufficient for agent isolation?

Traditional containers share a kernel, meaning a compromised agent can potentially access host resources or other workloads.

How does Edera secure agentic AI?

Edera runs each agent in an isolated zone with no shared kernel, tightly controlling data access while maintaining near-native performance.
